Matched market testing (MMT) has been the gold standard for proving incrementality for years. It’s simple in theory: split your markets, run your campaign, measure the lift. But in practice? It’s messy, slow, and often misleading.

MMT breaks down when geos aren’t truly comparable. It struggles with small sample sizes, local disruptions, and long timelines that just don’t work for agile teams. If you’ve ever launched a geo-test only to get inconclusive results, or worse, made a call based on flawed data, you’re not alone.

In this post, we’ll discuss the biggest problems with traditional matched market testing and show how newer, model-based approaches offer faster, more reliable answers for measuring true incrementality.

Key highlights:

- Matched market testing (MMT) is a geo-based method for measuring incrementality by comparing performance between test and control markets. It’s privacy-safe and easy to grasp, but difficult to execute well.

- Traditional MMT has major limitations, including mismatched markets, external disruptions, long timelines, and high costs, which make it unreliable and hard to scale across modern media strategies.

- Modern marketing measurement solutions solve these challenges with synthetic control models and real-time data. Instead of static geo tests, you get dynamic, AI-powered baselines that improve speed, accuracy, and adaptability.

- Post-MMT measurement platforms like Keen let you run always-on tests, measure across all channels, and forecast outcomes, turning incrementality into a repeatable, systematized capability.

What is matched market testing?

Matched market testing (MMT) is a method used to measure the incremental impact of marketing by comparing performance between two or more similar markets:

- Exposed to the campaign (test)

- Not exposed (control)

It’s a form of geo-based experimentation that doesn’t require user-level data, making it popular as we enter the cookieless marketing era.

The idea is simple: if the test market sees higher sales (or other marketing KPIs) compared to the control, the difference is attributed to the campaign. You can use this method to validate:

- Marketing effectiveness

- Pricing strategies

- Promotional offers

- Household penetration

Matched market testing is still widely used today because it’s conceptually straightforward and doesn’t require advanced analytics. But as we’ll explore next, that simplicity can come at a cost.

Read more: Incrementality testing in marketing

How does a matched market test work?

Executing matched market testing involves several moving parts. To implement a matched market test, use the following steps:

- Identify your test and control markets: Use historical data to select two or more markets that behave similarly, based on sales trends, demographics, competitive activity, and media exposure.

- Launch the campaign in the test market only: Keep the control market “dark” during the test period, meaning no exposure to the campaign.

- Run the test campaign for a fixed duration: Maintain the campaign in the test market for a clearly defined period (for example, 2–6 weeks), long enough to generate a measurable impact. The control market should remain unexposed for the same duration.

- Track marketing performance metrics across both markets: Measure outcomes like sales, conversions, or traffic over the course of the campaign. Timing, spend levels, and media mix should be held as consistent as possible across markets.

- Compare the lift between markets: Once the campaign ends, calculate the difference in performance. The assumption is that the marketing activity drives any change in the test market beyond what’s seen in the control.

- Analyze and interpret the marketing test results: If the test market outperforms, treat the difference as an incremental lift. But accurately analyzing the results depends heavily on how well you matched the markets and how well the test was executed.

Even small deviations between markets or external factors, like local news or weather, can distort results, which is where many MMTs fall short.
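To make the comparison step concrete, here is a minimal Python sketch of one common way to compute lift: a difference-in-differences estimate that projects the test market's pre-campaign sales forward using the control market's growth. The function name, the weekly sales figures, and the choice of difference-in-differences are illustrative assumptions, not a prescribed method.

```python
def did_lift(test_pre, test_post, control_pre, control_post):
    """Estimate incremental lift with a simple difference-in-differences.

    Each argument is a list of weekly sales for one market and one period.
    Assumes the test market would have grown at the same rate as the
    control market had the campaign not run.
    """
    control_growth = sum(control_post) / sum(control_pre)
    # Counterfactual: what the test market would have sold without the campaign.
    expected_test_post = sum(test_pre) * control_growth
    incremental = sum(test_post) - expected_test_post
    return incremental, incremental / expected_test_post


# Hypothetical weekly sales (units): four pre-campaign and four campaign weeks.
test_pre = [100, 100, 100, 100]
test_post = [120, 125, 130, 125]
control_pre = [80, 80, 80, 80]
control_post = [84, 84, 84, 84]

incremental_units, relative_lift = did_lift(
    test_pre, test_post, control_pre, control_post
)
print(f"Incremental units: {incremental_units:.1f}")
print(f"Relative lift: {relative_lift:.1%}")
```

In this made-up example the control market grew 5%, so the test market was expected to sell 420 units. It sold 500, giving roughly 80 incremental units. The whole estimate rests on the assumption that both markets would have grown at the same rate, which is exactly what a poor match breaks.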

Common challenges of matched market test methodology

While matched market test methodology sounds simple, the execution is anything but. Here are the most common MMT pitfalls that lead to unreliable or inconclusive results:

1. Poorly matched markets introduce bias

No two markets are perfectly identical. Differences in demographics, competitive activity, or even local events can skew results. Even small mismatches can create artificial lift or hide the true impact of your campaign, making your test read more like a guess than a measurement.

A study by Facebook and researchers at the Kellogg School of Management found that when running repeated simulations on geo-paired markets, the estimated lift from a matched market test varied wildly, from –2% to +80%, even though the true lift was about 33%. In other words, a single test could land far from the real effect purely due to noise.
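You can see the same kind of spread in a toy simulation. The Python sketch below repeatedly simulates a two-market test with a known true lift and records the estimate each time. Every number in it (baseline sales, noise level, test length, the 10% true lift) is a hypothetical assumption chosen for illustration and is not taken from the study.

```python
import random
import statistics


def simulate_pair_test(true_lift, noise_sd, weeks, rng):
    """Simulate one two-market test and return the estimated relative lift.

    Both markets share a baseline of 100 units per week. Week-to-week noise
    is drawn independently for each market (a simplifying assumption).
    """
    baseline = 100.0
    control = [baseline + rng.gauss(0, noise_sd) for _ in range(weeks)]
    test = [
        baseline * (1 + true_lift) + rng.gauss(0, noise_sd) for _ in range(weeks)
    ]
    return sum(test) / sum(control) - 1


rng = random.Random(42)  # fixed seed so the run is repeatable
estimates = sorted(
    simulate_pair_test(true_lift=0.10, noise_sd=20.0, weeks=4, rng=rng)
    for _ in range(2000)
)

# Bounds of the middle 95% of simulated estimates.
low = estimates[int(0.025 * len(estimates))]
high = estimates[int(0.975 * len(estimates))]
print(f"Mean estimate: {statistics.mean(estimates):.1%}")
print(f"Middle 95% of estimates: {low:.1%} to {high:.1%}")
```

Even though the true lift is fixed at 10% in every run, individual runs land well above and below it. With only one test in hand, you cannot tell which of those runs you got.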

Read more: Validate your marketing expertise with data

2. Small sample sizes reduce statistical power

Matched market tests often use just a handful of regions. Such a small sample size makes it hard to detect real effects with confidence, especially if your marketing budget allocation is spread thin.

The catch: even a much bigger sample doesn’t guarantee precision. A study by Lewis and Rao analyzed 25 real-world digital ad experiments, each with over 500,000 participants, and found that most confidence intervals for ROAS exceeded 100%, with the narrowest still above 50%.

The result? Noisy data, wide confidence intervals, and limited trust in your findings.
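A quick way to see how a handful of regions limits statistical power is an exact permutation test. The Python sketch below is an illustration under assumed numbers: the growth figures and the function name are hypothetical, and the permutation test is just one of several ways to analyze a geo test.

```python
from itertools import combinations


def permutation_p_value(test_values, control_values):
    """One-sided exact permutation test on the difference in means.

    Returns the share of all possible test/control relabelings whose
    difference in means is at least as large as the observed one.
    """
    pooled = list(test_values) + list(control_values)
    n_test = len(test_values)
    observed = sum(test_values) / n_test - sum(control_values) / len(control_values)

    at_least_as_large = 0
    total = 0
    for test_idx in combinations(range(len(pooled)), n_test):
        chosen = set(test_idx)
        test_group = [pooled[i] for i in test_idx]
        control_group = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        diff = sum(test_group) / len(test_group) - sum(control_group) / len(control_group)
        if diff >= observed - 1e-12:  # small tolerance for float rounding
            at_least_as_large += 1
        total += 1
    return at_least_as_large / total


# Hypothetical sales growth (%) per market during the campaign.
test_markets = [12.0, 15.0, 14.0]
control_markets = [5.0, 7.0, 6.0]

p_value = permutation_p_value(test_markets, control_markets)
print(f"p-value with 3 test and 3 control markets: {p_value:.2f}")
```

With three test and three control markets, there are only 20 possible relabelings, so the smallest achievable p-value is 1/20 = 0.05, even when every test market beats every control market. Drop to two markets per group and the floor rises to 1/6.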

3. External factors pollute the matched market test

You can’t control the weather, local news cycles, or what your competitors are doing in a specific market. These uncontrollable factors influence performance in ways that have nothing to do with your test campaign, leading to false positives or negatives.

According to the IAB/MRC Retail Media Measurement Guidelines, it’s “impossible to fully match” markets on all relevant factors. Unexpected price promotions, in-store displays, digital coupons, or competitive moves in one market can distort results. Yet they’re often outside the scope of the test design.

That means even a carefully structured geo-test can be thrown off by local disruptions you can’t predict or prevent.

Read more: Causality in marketing

4. Long test run times and high costs are unsustainable

Because you’re testing in live markets, you need a clean, uninterrupted campaign period—often four to eight weeks or more. That means long timelines, higher media spend, and added operational complexity just to run one test.

5. MMT is hard to replicate or scale

A single matched market test gives you results for that specific moment, in that specific place. But those results don’t necessarily apply to other markets, different marketing channel mixes, or future campaigns. Replicating the test at scale is expensive and often impractical.

How AI-powered measurement solutions fix traditional marketing tests

Advances in modeling (like Bayesian marketing mix modeling, or MMM), data processing, and automation have introduced smarter, faster ways to measure incrementality, without relying on manual geo-matches or long campaign cycles. Below are five ways modern solutions solve the core problems of traditional matched market tests.

1. Use synthetic control models instead of manually matched geos

Synthetic control modeling is a statistical technique that builds a weighted combination of multiple control units to closely resemble the test group, without relying on human guesswork. Rather than choosing “similar” cities, the model constructs a virtual control that mirrors the behavior of your test market based on historical patterns.

As a result, you solve:

- Bias from mismatched markets

- Limited control options when geos don’t align

- Overreliance on manual judgment
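To show what “a weighted combination of multiple control units” looks like in practice, here is a deliberately simple Python sketch. It searches for non-negative donor weights that sum to 1 and best reproduce the test market's pre-campaign sales, then uses those weights to build the counterfactual for the campaign period. The data, function names, and brute-force grid search are all assumptions made for illustration. Production tools typically use a constrained optimizer, and this is not a description of any vendor's implementation.

```python
def simplex_grid(n_donors, steps):
    """Yield every tuple of n_donors non-negative integers summing to steps."""
    if n_donors == 1:
        yield (steps,)
        return
    for first in range(steps + 1):
        for rest in simplex_grid(n_donors - 1, steps - first):
            yield (first,) + rest


def synthetic_series(weights, donors):
    """Weighted combination of donor series, one value per period."""
    return [
        sum(w * donor[t] for w, donor in zip(weights, donors))
        for t in range(len(donors[0]))
    ]


def fit_synthetic_control(target_pre, donors_pre, steps=20):
    """Grid-search donor weights (>= 0, summing to 1) that best track the target."""
    best_weights, best_error = None, float("inf")
    for combo in simplex_grid(len(donors_pre), steps):
        weights = [k / steps for k in combo]
        fitted = synthetic_series(weights, donors_pre)
        error = sum((a - f) ** 2 for a, f in zip(target_pre, fitted))
        if error < best_error:
            best_weights, best_error = weights, error
    return best_weights


# Hypothetical weekly sales. Three donor (control) markets, four pre-campaign weeks.
donors_pre = [
    [100, 110, 105, 120],
    [80, 85, 90, 88],
    [60, 58, 65, 70],
]
target_pre = [86.0, 92.1, 92.5, 100.4]  # test market before the campaign

# Two campaign weeks.
donors_post = [[125, 130], [90, 92], [72, 71]]
target_post = [114.0, 118.0]

weights = fit_synthetic_control(target_pre, donors_pre)
counterfactual = synthetic_series(weights, donors_post)
incremental = sum(target_post) - sum(counterfactual)
print("Donor weights:", weights)
print(f"Incremental units vs. synthetic control: {incremental:.1f}")
```

The key design choice is that the weights come from pre-campaign fit rather than from someone's judgment about which cities feel similar. The model can blend several imperfect controls into one that tracks the test market more closely than any single market would.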

2. Shift from campaign-based testing to real-time incrementality modeling

Modern incrementality platforms ingest and process campaign data continuously. Instead of waiting for a test to run its full course, you can detect early lift signals, course-correct during advertising flights, and get actionable results much faster. You no longer struggle with:

- Long test durations (4-8 weeks or more)

- Delayed insights that miss the campaign window

- Missed opportunities for in-flight optimization

3. Train models on large, cross-brand datasets to improve reliability

Unlike traditional geo tests that rely on a handful of regions, AI-driven marketing mix modeling draws from billions of data points across industries, channels, and campaign types. The model learns what normal looks like in various contexts, reducing the influence of outliers or short-term noise.

Keen’s Marketing Elasticity Engine (MEE) is one example of a model trained this way.

By using AI-powered MMM platforms like Keen, you get:

- Stable and reliable results

- Tests that are far less likely to break down due to random variation

- Confidence in marketing decision-making

4. Expand matched market testing across all channels

Modern marketing tools aren’t limited to geo-based execution. You can measure incrementality across digital channels (social, search, display, CTV, retail media) by using modeled baselines and synthetic controls—no geographic holdouts required. Such tools solve:

- Inability to test non-local or always-on channels

- Blind spots in performance across your media mix

- Difficulty proving lift from digital or blended campaigns

5. Build always-on experimentation into your marketing strategies

With model-based systems, testing isn’t a one-time effort; it becomes part of your ongoing marketing measurement strategy. You can continuously test new channels, creative, budget levels, and audience segments without resetting the process each time.

The best part: you don’t have to wait weeks and months to get the results. Marketing predictive analytics makes it possible in a single click.

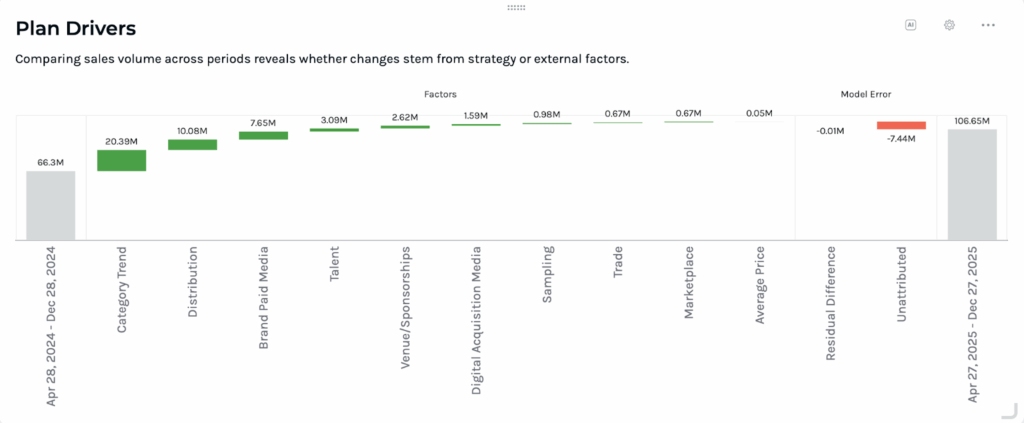

For example, using Keen’s scenario-based marketing planning, you can test different ad spend allocation, channel mix, and much more, before you ever spend a single dollar:

Read more: An always-on marketing approach builds awareness and stimulates sales

Replace matched market testing with Keen

Moving beyond traditional matched market testing requires more than just faster tools. It demands a fundamentally smarter approach to incrementality.

Instead of relying on manual geo matching, limited test windows, and noisy results, modern marketing measurement systems apply continuous modeling and real-world data to deliver accurate, actionable insights.

Keen’s marketing measurement software replaces outdated testing methods with a model-based system that’s faster, more precise, and built to scale. Here’s what a post-MMT measurement system should include—and how Keen delivers on each point:

- Synthetic control modeling instead of geo matching: Statistical models construct counterfactuals that reflect true baseline performance—no need to manually identify “similar” markets.

- Real-time data ingestion for faster readouts: Models update continuously, delivering early lift signals without waiting weeks. You can now make mid-flight adjustments and quicker decisions.

- Incrementality across every channel: Measure impact beyond traditional formats—including CTV, paid social, search, and retail media—so you can see what’s truly driving performance across your planned media mix.

- Sales forecasting and scenario planning: Simulate how changes in spend or channel allocation will affect outcomes.

If matched market testing was a good first step, Keen is the leap forward. Post-MMT measurement is faster, more flexible, and far more actionable, and platforms like Keen are making it possible.

Start a free trial to see how you can skip long and expensive matched market tests with Keen.

Frequently asked questions

What is a market test in marketing?

A market test is a controlled experiment in which you launch a campaign, promotion, or product in a limited region to see how it performs before scaling. It’s a way to get real-world results without committing the full budget upfront.

Matched market testing is one form of market testing. It compares results between a test market (where the campaign runs) and a control market (with no exposure) to measure incremental impact.

Why is matched market testing important to brands?

Matched market testing helps brands isolate the actual impact of their marketing activities, especially in privacy-first environments where user-level tracking isn’t possible. It’s particularly important because it helps you:

- Measure causal lift by comparing campaign-exposed regions to unexposed ones

- Validate large-scale investments like TV, OOH, or retail promotions before rolling them out nationally

- Fine-tune brand-level messaging when A/B testing isn’t feasible

- Build confidence among finance and leadership teams by grounding results in observable, market-level performance

What are the benefits of matched market testing?

While matched market testing comes with limitations, it still offers unique benefits when executed properly, as it is:

- Privacy-compliant: It doesn’t require user-level data, making it future-proof in a cookieless world.

- Conceptually simple: MMT is easy to explain and understand across teams, including finance and strategy.

- Enterprise-friendly: It works well for large-scale, regionally isolated campaigns where holdouts are practical.

- Reflective of live markets: It can validate pricing, promotions, or creative strategies in real-world market conditions before scaling.

How can I choose similar markets for the test campaign?

Choosing well-matched markets is one of the most difficult steps of MMT. To select a control market:

- Use historical performance data: Look for similar trends in sales, revenue, or conversion rates.

- Match on demographics and geography: Consider income levels, population size, climate, and urban vs. rural characteristics.

- Check for media consumption and brand awareness parity: Ensure both markets engage similarly with the channels being tested.

- Control for external noise: Avoid markets with recent events, regulatory changes, or competitor activity that could skew results.

- Use statistical tools: Clustering, matching scores, or synthetic control models can improve match quality beyond manual judgment.
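As a starting point for the historical-data step, the Python sketch below ranks candidate control markets by how closely their past sales move with the test market, using a plain Pearson correlation. The market names and sales figures are placeholders, and correlation is only one possible matching score. It says nothing about demographics, media consumption, or upcoming local events, so treat it as a first filter rather than a final answer.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length series."""
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def rank_control_candidates(test_series, candidates):
    """Sort candidate markets by how closely their history tracks the test market."""
    scores = {
        name: pearson(test_series, series) for name, series in candidates.items()
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical weekly sales. Market names are placeholders.
test_market = [100, 104, 98, 110, 115, 108]
candidates = {
    "Market A": [50, 52, 49, 55, 58, 54],  # moves with the test market
    "Market B": [90, 88, 95, 85, 80, 86],  # moves against it
    "Market C": [70, 71, 70, 72, 70, 71],  # mostly flat
}

ranked = rank_control_candidates(test_market, candidates)
for name, score in ranked:
    print(f"{name}: correlation {score:.2f}")
```

Note that Market A is half the size of the test market yet still ranks first, because correlation measures whether two markets move together, not whether they are the same size. Whether size also matters depends on how you plan to compute lift.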

What are some use cases of a successful matched market test?

Matched market testing works best when you can isolate the campaign and maintain consistency across markets. Successful examples of a matched market test include:

- TV or CTV test campaigns: Run in one DMA and compare results to a control DMA with no exposure.

- Retail promotions: Test a limited-time in-store offer in one region and compare lift to stores in a similar region without the promo.

- Pricing tests: Launch a new pricing model in a test city and assess impact compared to a control city with current pricing.

- Creative campaign effectiveness tests: Run new brand messaging in one market and measure brand lift or conversions against a control.

- Channel mix tests: Try a different media mix (for example, more digital, less TV) in the test market and evaluate its performance versus the baseline.